LCMUX

Status: Proposal (as of 24.01.2025)

LCMUX (Logical Channel Multiplexing) is a transport protocol to allow transmitting several, logically independent streams of data over a single, reliable underlying data stream.

There exist several multiplexing protocols with this goal already, as well as countless of their higher-level cousins, the RPC protocols. Most of these tag messages with ids to distinguish which logical data stream the messages belong to, and call it a day. We argue that this mostly misses the point.

The difficult part is not to partition messages into disjoint streams; the difficult part is to ensure independent processing of those streams on a shared pool of resources (network, CPU-time, main memory). Multiplexed streams must remain logically independent11Giving a precise notion of logical independence is difficult, but intuitively, traffic on some data stream should neither cause unbounded delays nor dropping of messages on another data stream. despite their competition for limited physical resources. Hence, the design of LCMUX starts from considerations of resource management.

Multiplexing and Resources

Suppose we want to send many individual messages or requests over a single, fifo communication channel. If processing a message takes a long time, it makes little sense to wait for the message to be fully processed before accessing the next message from the communication channel — especially if the next message can (or must) be processed independently from the first one. Instead, one usually moves the first message elsewhere in memory,This trades time usage for space usage. Time is dynamic and ephemeral, whereas space is comparatively static and reusable. This simplification is what makes the approach we describe here feasible. freeing up the communication channel and allowing to pop the next message almost immediately. The actual, slow message processing can then be done by another thread or via non-blocking IO.

Unfortunately, memory is finite, there is a limit on how many messages can be copied elsewhere. Leaving messages in the buffer of the underlying communication channel would induce delays on processing independent successive messages. Crashing the process would also affect the processing of independent successive messages. The only acceptable solution is to drop messages that cannot be moved to a buffer.

Since we must allow a receiver to drop incoming messages, the receiver must inform the sender which messages got processed, and which got dropped. A sender can adjust its rate of sending according to that feedback. Sound familiar? We are reinventing TCP from first principles22And any multiplexing protocol which doesn't reinvent TCP should have good, explicitly documented reasons..

Transmitting a message only to have it dropped by the receiver is rather inefficient33Spending bandwidth on a message that gets ignored on delivery is obviously wasteful. Less obviously, the sender must devote computational resources to the contingency that every message it sends might be dropped (for example, a retransmission buffer).. LCMUX goes beyond what TCP can do by allowing the receiver to communicate how much buffer space it has left, acting as a promise that as many bytes of messages will not be dropped44Moving this concept to TCP would not make sense: preemptive acknowledgements would require a reliable underlying transport channel.. This can allow for operation where no messages are ever dropped. Such systems are often called “credit-based”.

While never sending messages that end up dropped conserves bandwidth usage, it introduces latency: the sender might need to wait for notifications of buffer capacity. Hence, LCMUX does allow for optimistic sending of messages, messages which then might end up being dropped if buffer capacity at the time of receiving is insufficient.

There are two equally valid viewpoints of LCMUX: as an acknowledgement-based system with preemptive acknowledgements, or as a credit-based system with speculative sending. Our remaining presentation takes the latter viewpoint: we first establish a system for communicating messages that can be sent without any danger of dropping, and then extend it with optimistic sending and failure notification.

Requirements

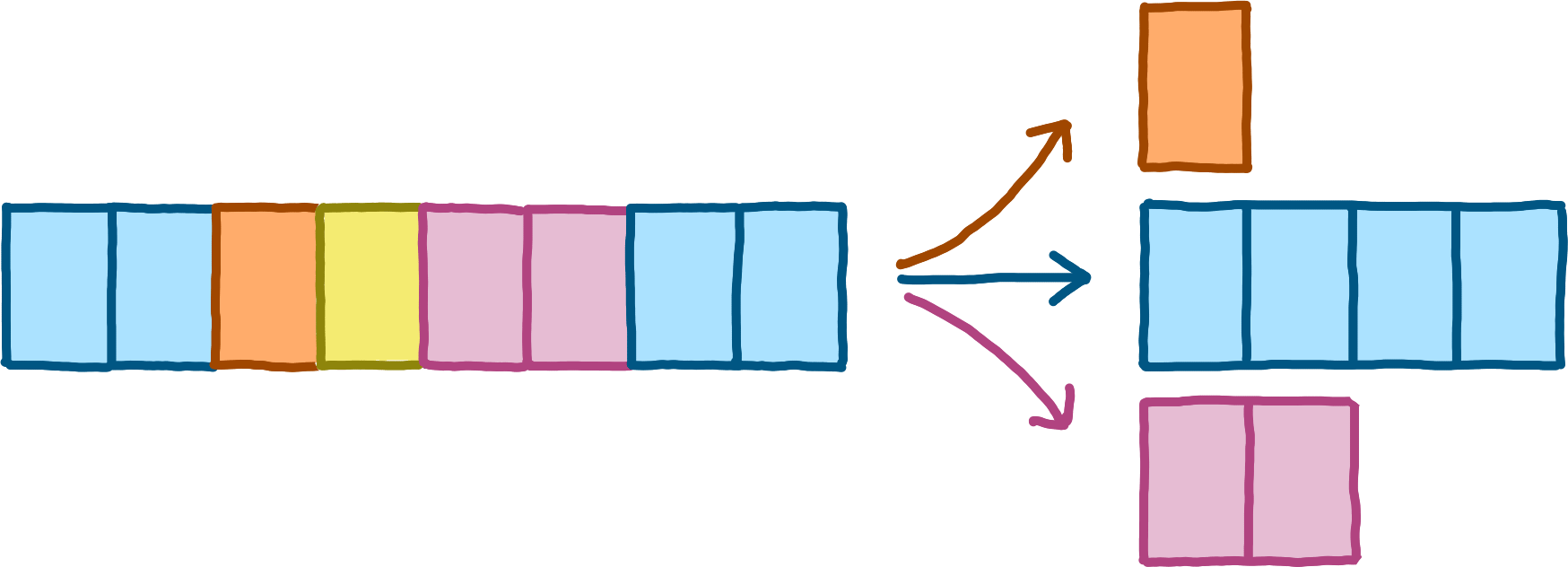

To describe LCMUX more precisely, we first need to introduce some terminology. One peer — the client — whishes to send messages to the other peer — the server. Some messages can be reliably processed by the server without ever requiring buffering; these messages we call global messages. Messages that might require buffering are called channel messages. Each channel message belongs to exactly one logical channel. Many different (kinds of) messages may belong to the same logical channel. For each logical channel, the server maintains a fifo buffer, into which it immediately moves all corresponding channel messages upon arrival.

We now list some properties we need our multiplexing protocol to fulfil.

The server should be able to inform the client about its remaining buffer capacities for its logical channels, and keep these values up to date as it buffers and processes (thus freeing buffer space) channel messages. The client should be able to rely on this information: if the server promises buffer capability, it must deliver.

The server should be able to resize its buffers to cope with competing resource demands. Increasing buffer capacity is unproblematic, but we will see that decreasing buffer capacity requires cooperation with the client in some situations.

Both the client and the server should be able to voluntarily impose and communicate limits on how many bytes of channel messages they will at most send or receive over a logical channel in the future. Once that limit reaches zero, the other peer can consider the channel as being closed.

Finally, the client should be able to optimistically send channel messages even though their corresponding buffer might be full — by the time the messages arrive at the server, the buffer space might have become available after all. The server must be able to reject and drop such optimistically transmitted messages, however. When it does, it must inform the client, because the client always needs to maintain accurate (if delayed) information about all buffer states.

Solution Overview

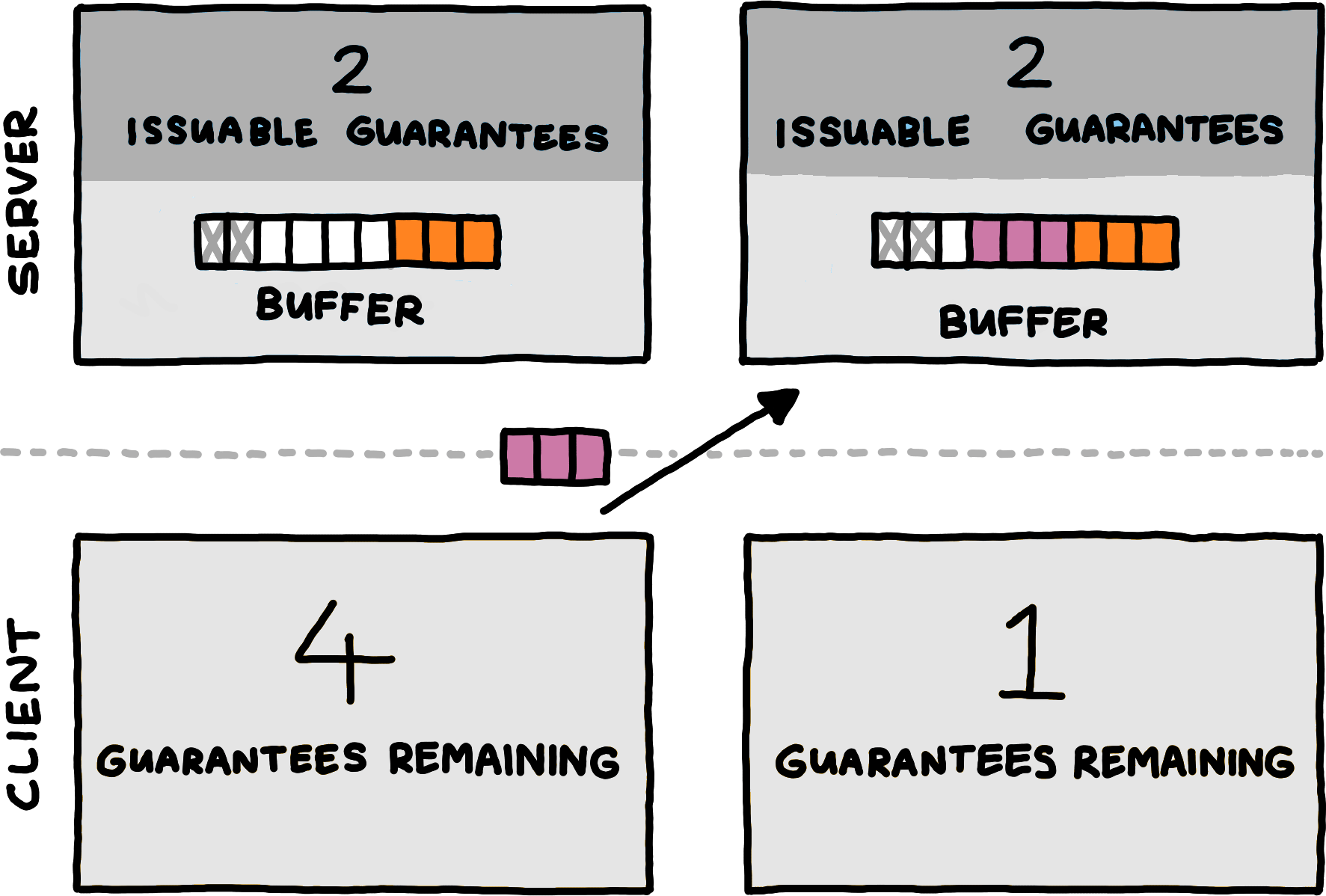

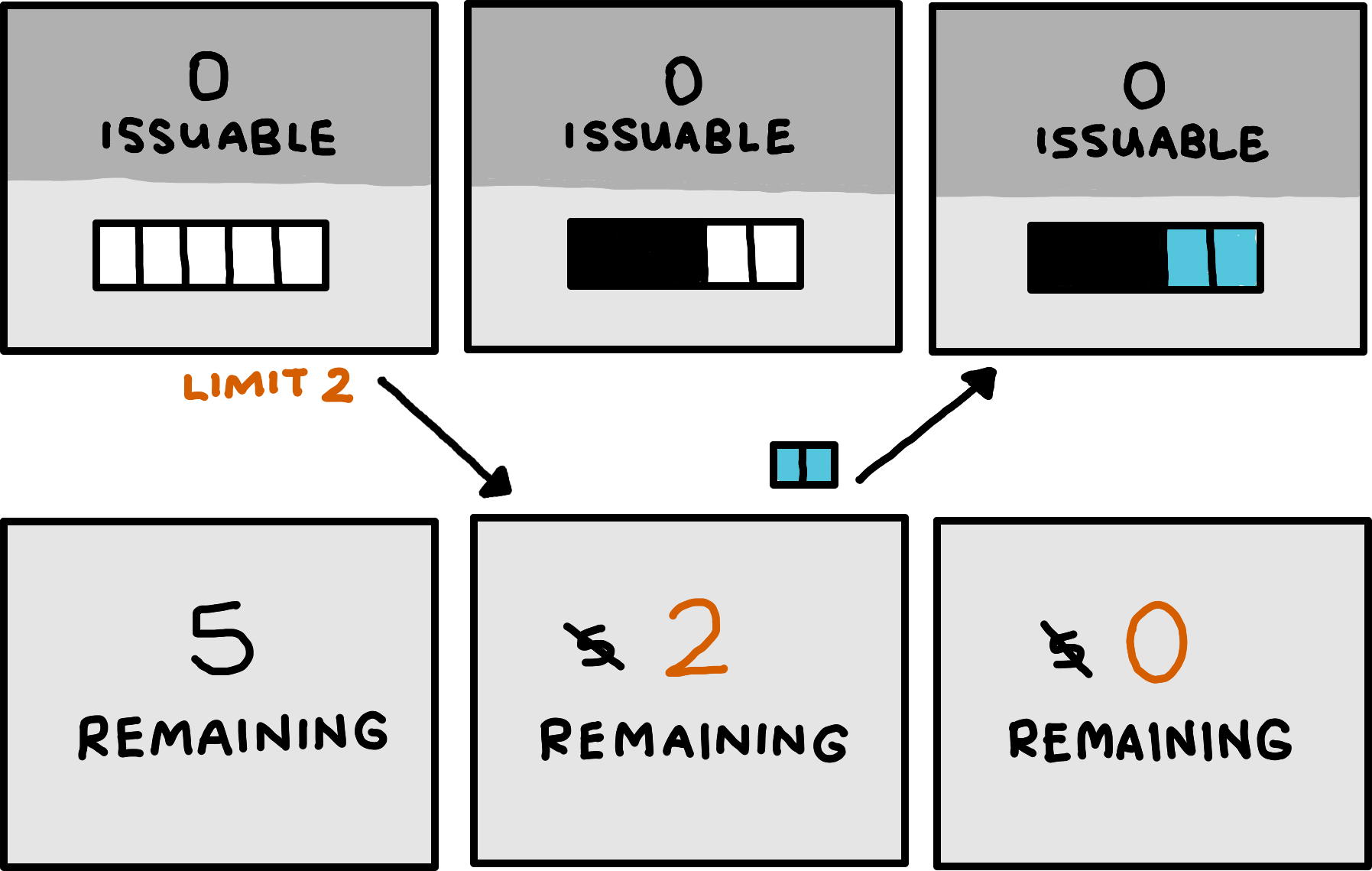

To satisfy these requirements, our solution builds on the concept of guarantees. The server sends per-logical channel guarantees of available buffer space to the client; the client tracks its available guarantees and knows that all of its channel messages that do not exceed the available guarantees for their logical channel will be buffered.

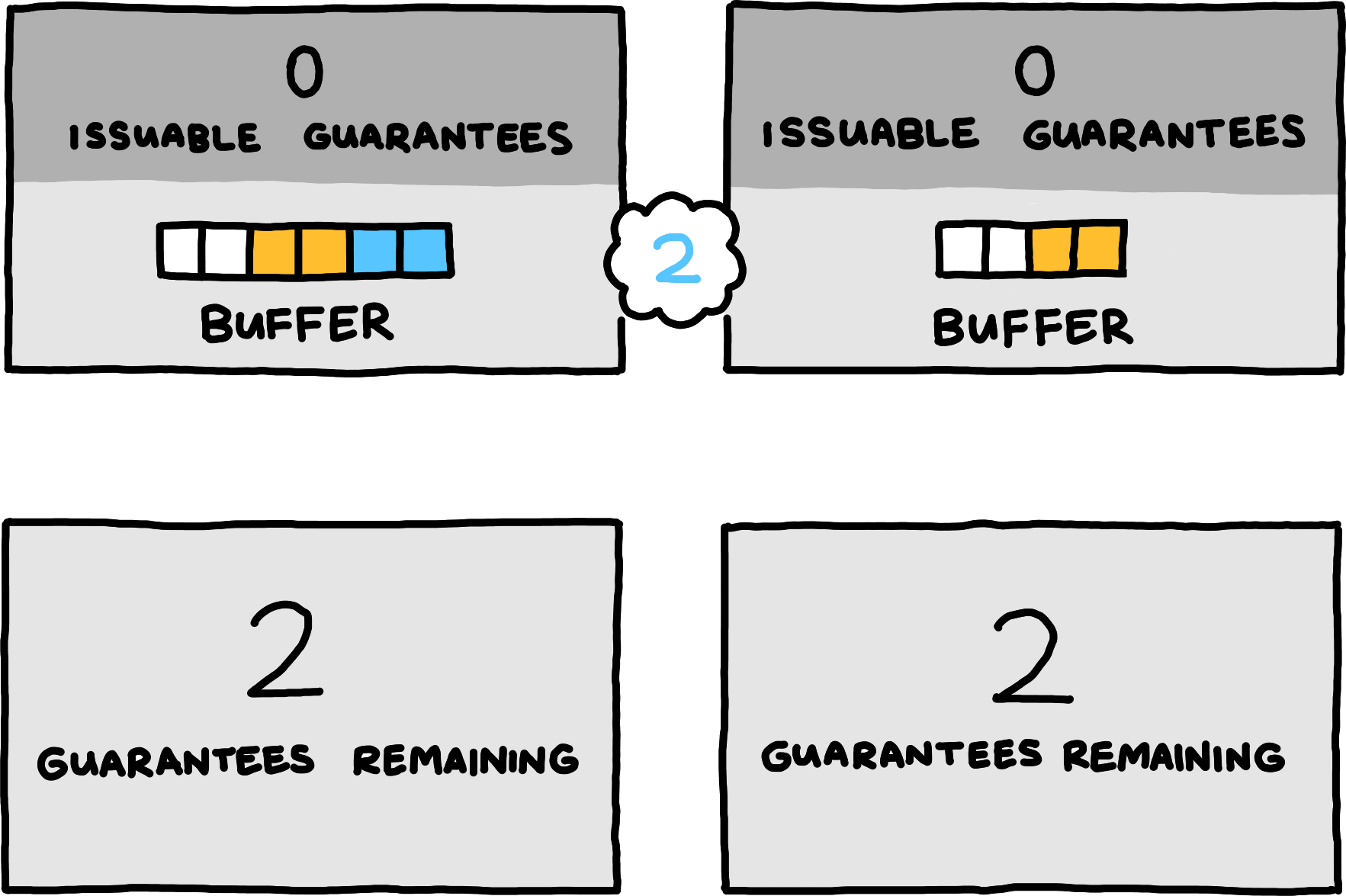

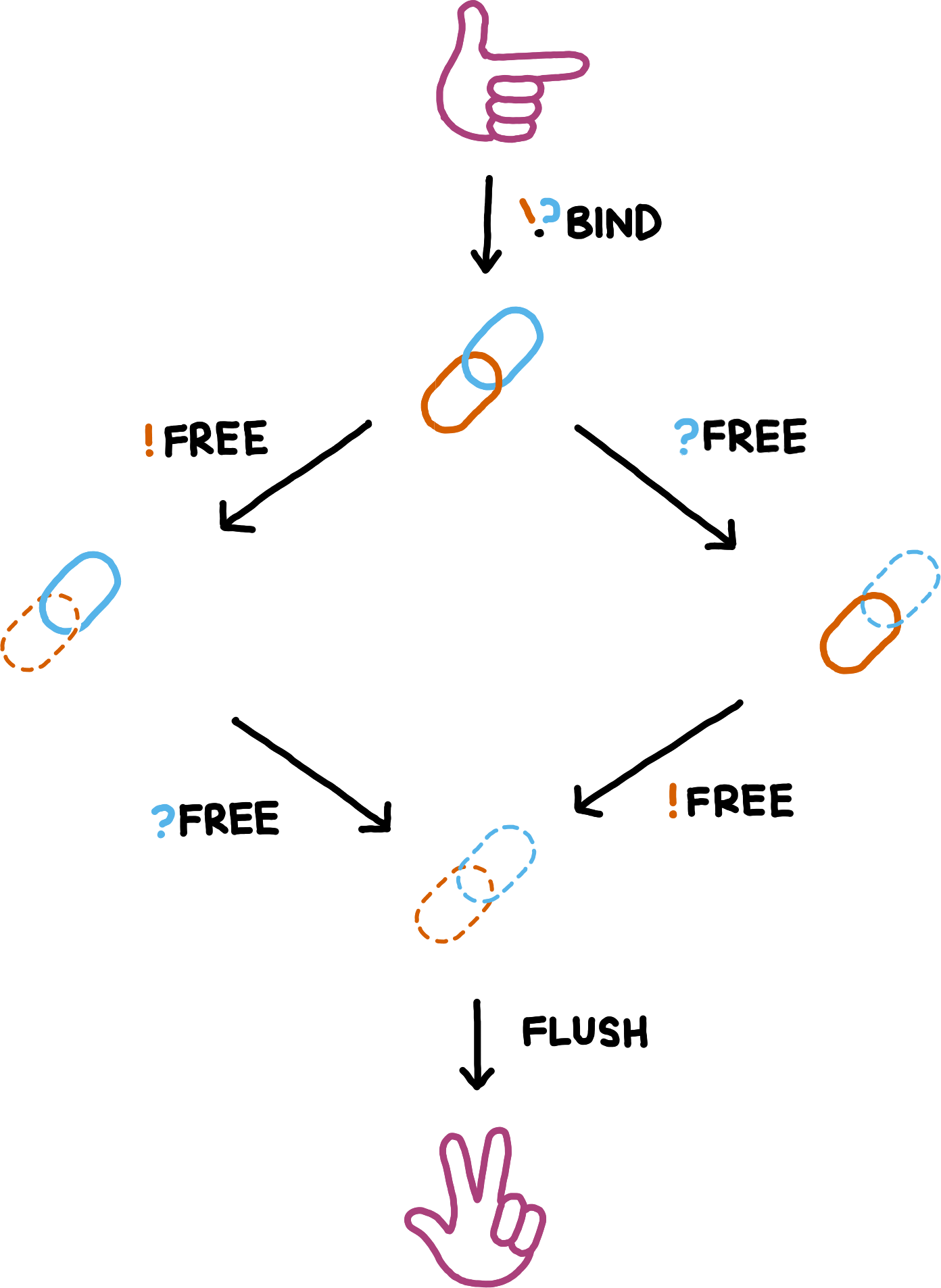

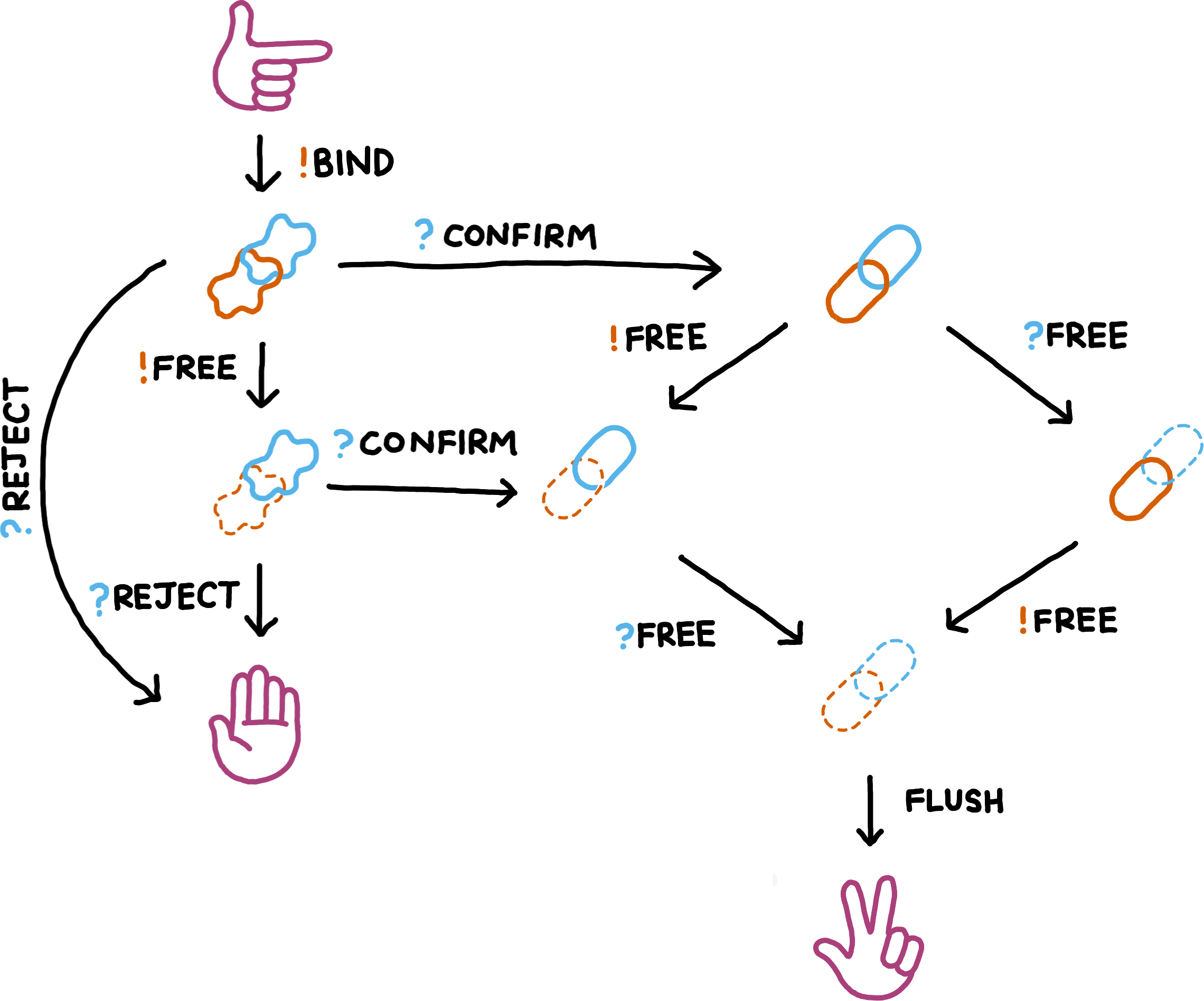

This diagram shows the statekeeping for only a single logical channel. The full session state consists of an independent copy for every different logical channel a protocol uses.

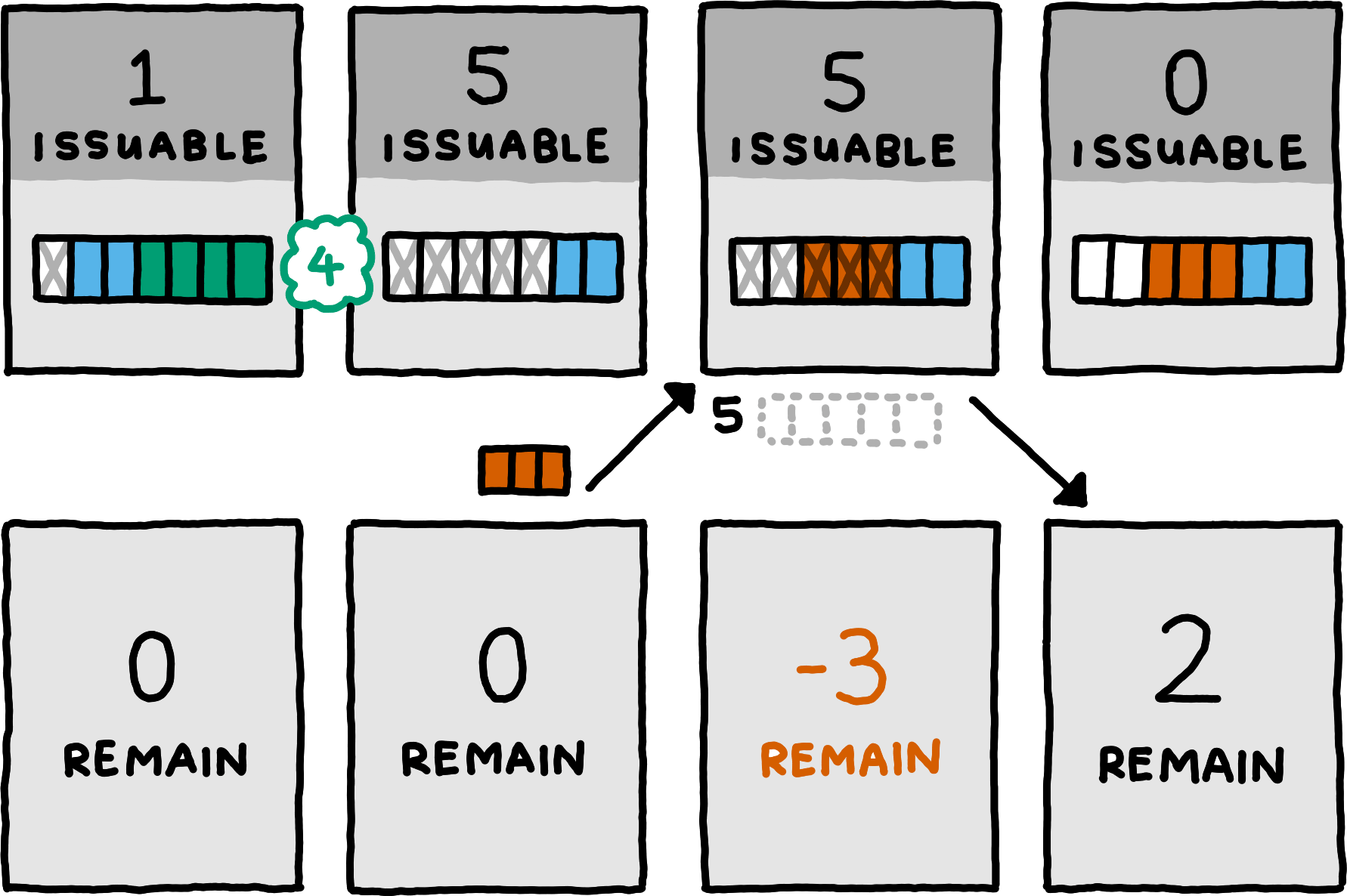

This diagram shows the statekeeping for only a single logical channel. The full session state consists of an independent copy for every different logical channel a protocol uses.When the server increases a buffer’s capacity, it gives that many guarantees (measured in bytes) for the corresponding logical channel to the client. When establishing the connection, the client has no guarantees, and the server typically starts by sending guarantees equal to its initial buffer capacities. Conceptually, the server begins its operation by increasing its buffer capacities from zero to their actual starting amounts.

The second way of giving guarantees occurs when the server has processed a buffered channel message and thus frees up buffer space. It then communicates the amount of freed buffer space, and for which logical channel. The server need not communicate this immediately, it is free to send only the occasional update that aggregates guarantees that stem from processing several channel messages from the same logical channel.

When the server wishes to reduce the capacity of some buffer, it can simply process messages from that buffer without informing the client. This decreases the overall amount of guarantees in the system by the correct amount.

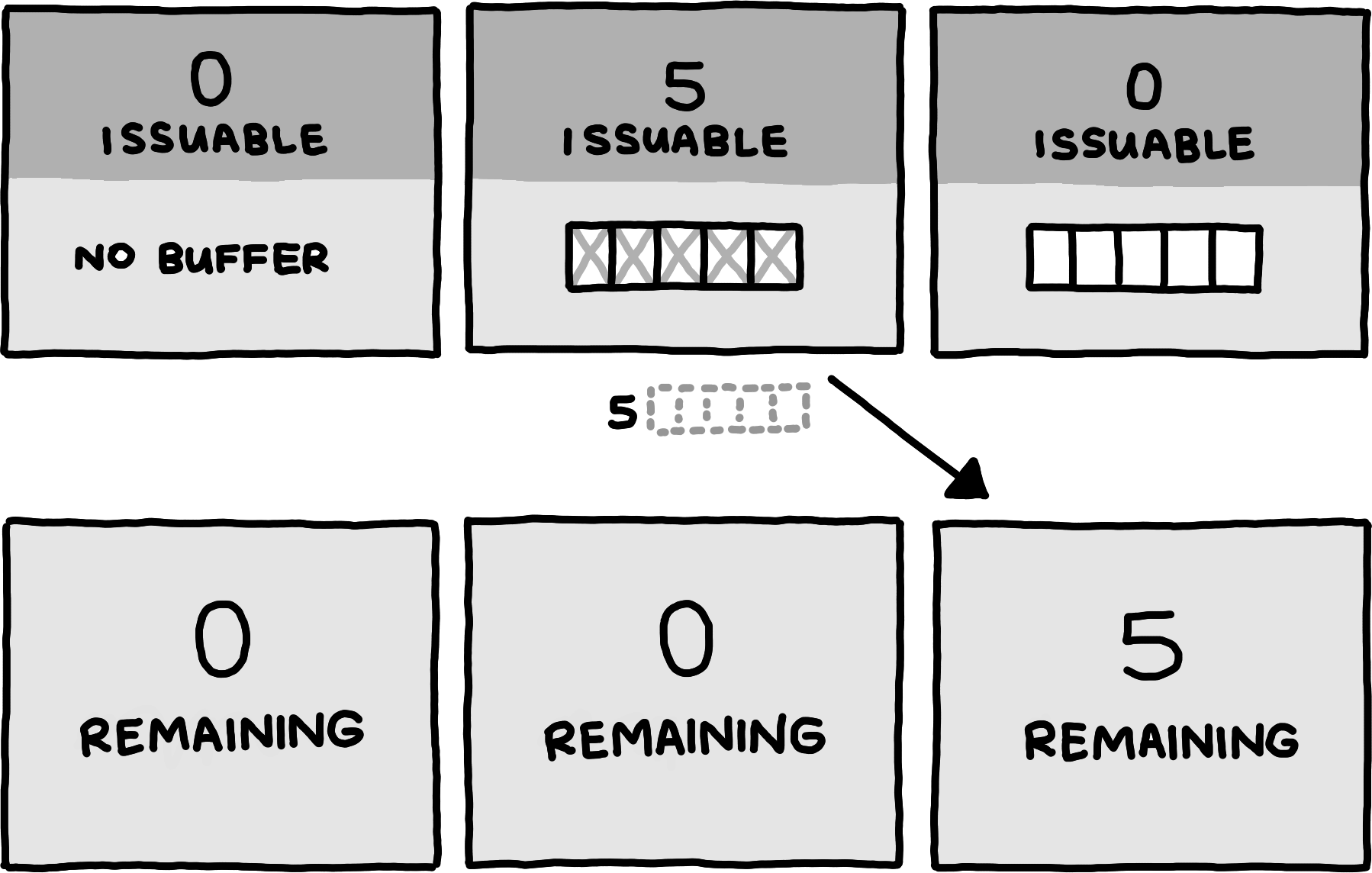

This technique is only applicable when the server has some buffered messages; it does not allow it to reduce buffer capacity for empty buffers. But the server cannot simply decrease the buffer size and then inform the client when a buffer is empty: while that information is travelling to the client, the client might send messages on this logical channel, fully expecting them to be buffered by the server.

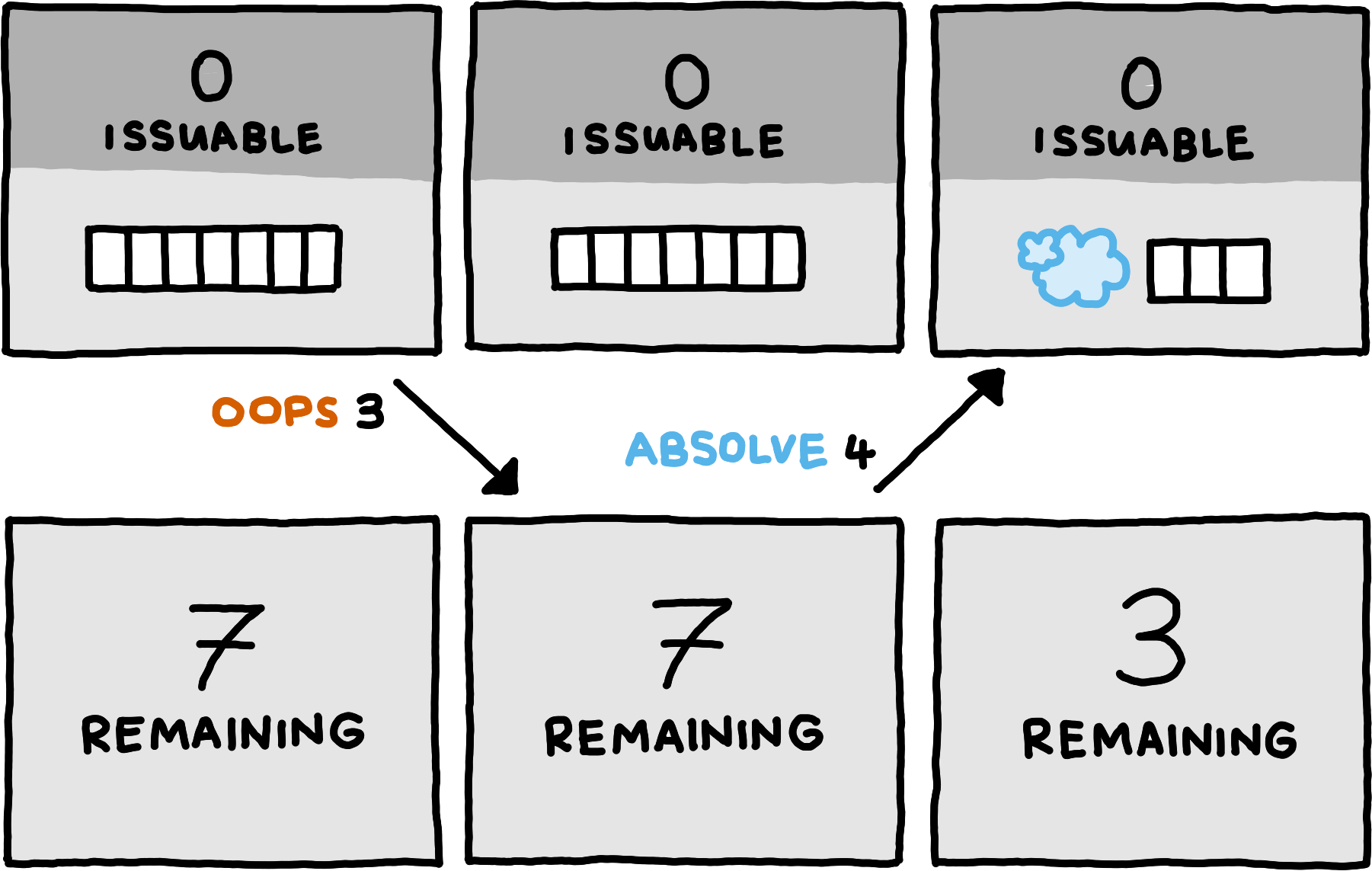

To solve this problem, we introduce a mechanism for the client to absolve the server of some absolute amount of its unused guarantees on some logical channel, and we add a way for the server to ask for such absolution. Asking for absolution takes the form of specifying the logical channel and the number of guarantees the server would like the client to keep. Upon receiving this request, the client absolves the server of exactly the amount needed to reach the desired number of guarantees. If the client already has fewer guarantees by the time the request for absolution arrives, the client simply ignores the request.

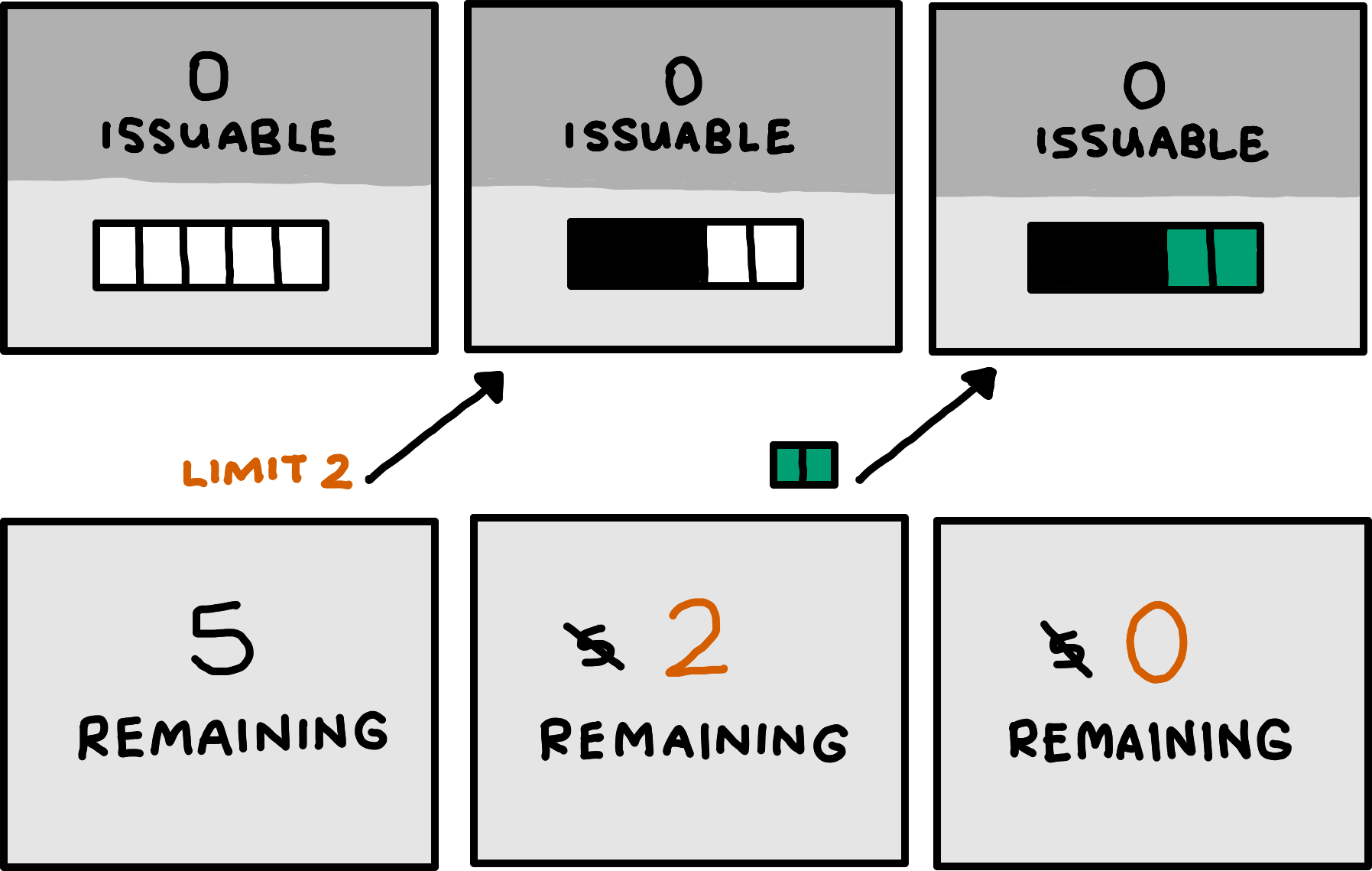

Next, we consider the ability to close logical channels. More precisely, we consider a generalization of closing: the communication of an upper bound on future usage.

At any point, the client may communicate an upper bound on how many bytes of channel messages it wants to communicate over a logical channel in the future. Both peers can track this number and subtract from it whenever the server accepts (i.e., receives and does not drop) bytes accross the logical channel, or when the client provides absolution for it. The client must not send any messages that could turn the tracked number negative. The client may send new upper bounds over time, but only if they strictly tighten the limit. Any bounds that would allow the client to send more bytes than promised in a previous bound are forbidden.

Fully analogously, the server may communicate an upper bound on how many more bytes of messages it will accept over a logical channel in the future. After accepting that many bytes, all future bytes must be dropped. In particular, this bound thus also gives an upper bound on how many more guarantees the server might issue for that logical channel in the future. The server may communicate new upper bounds over time, but only if they stictly tighten the limit.

Taken together, these techniques make for a stable system where the client never overwhelms the buffer capacity of the server. As a final addition, however, we want to allow the client to optimistically send data even without corresponding guarantees. The server may, after all, have freed up buffer space by the time the optimistically transmitted channel messages arrive.

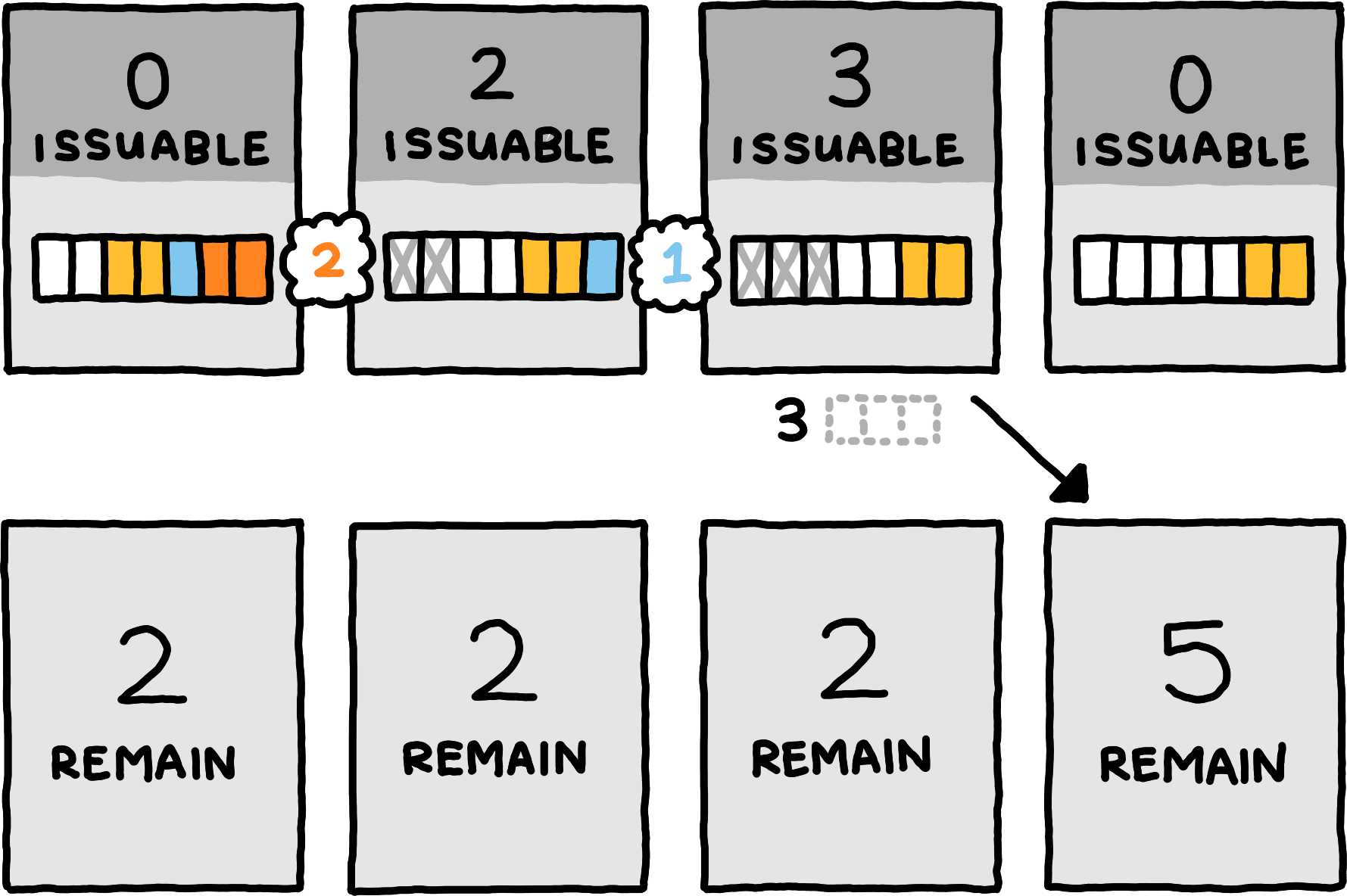

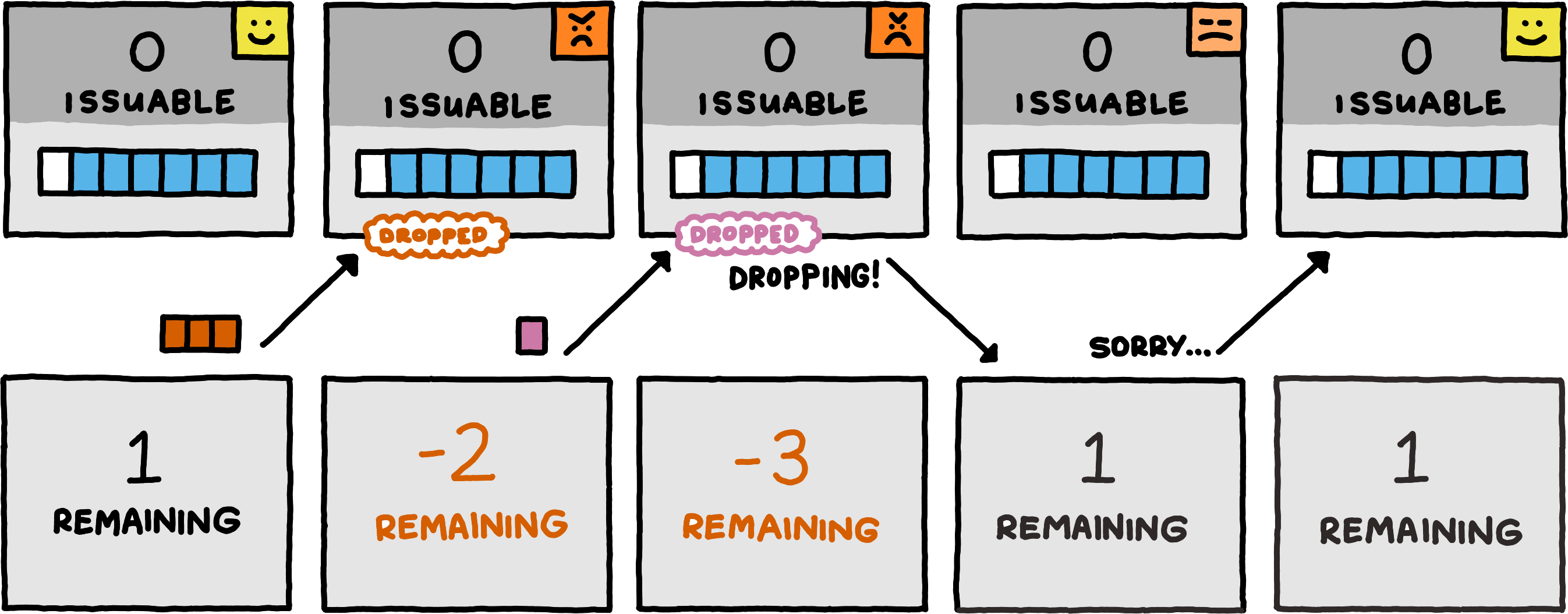

If the server has insufficient buffer capacity when an optimistically transmitted message arrives, the server drops it without any processing. We must add feedback mechanism to the protocol, since the client must be informed of the message dropping.

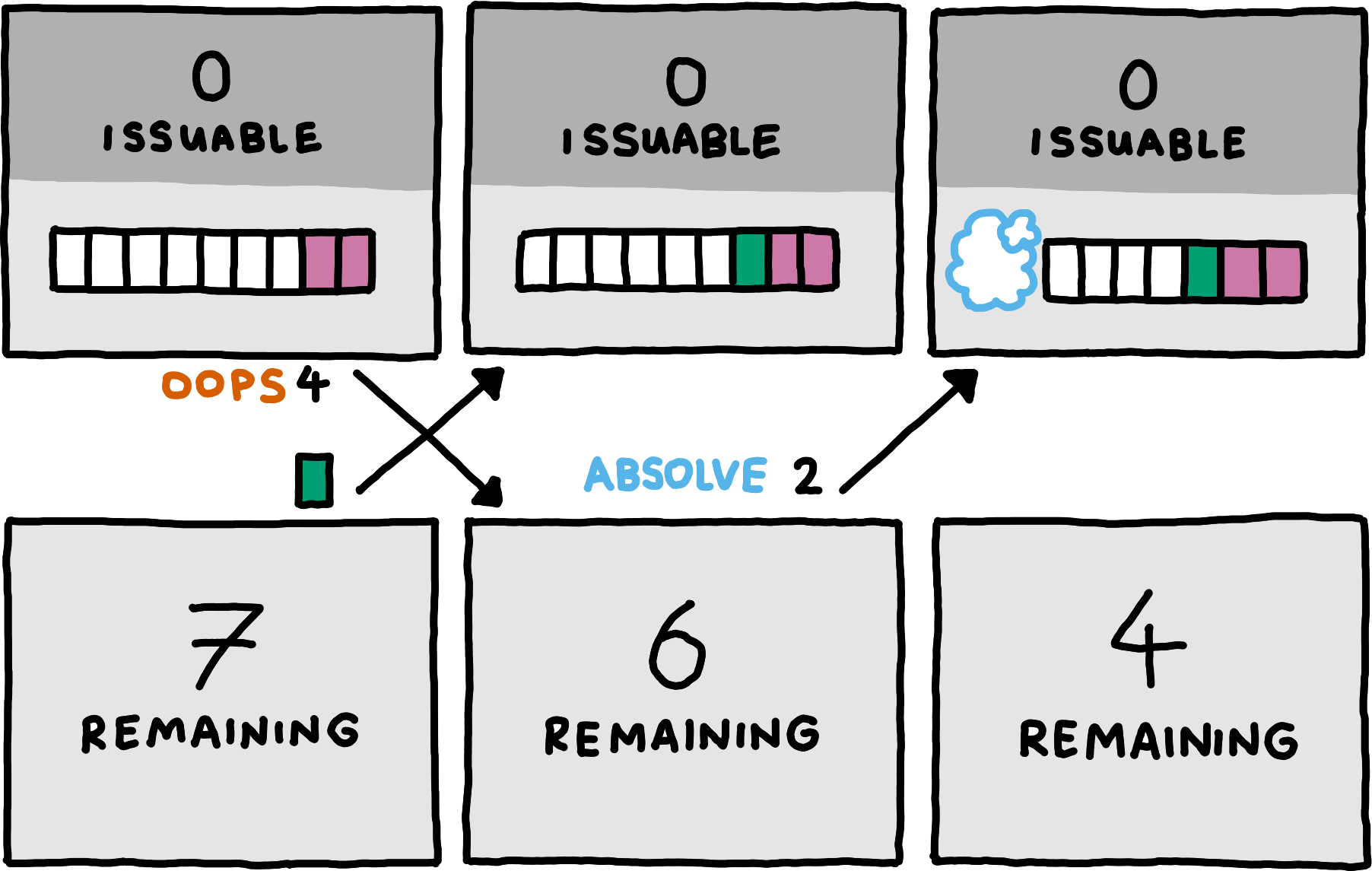

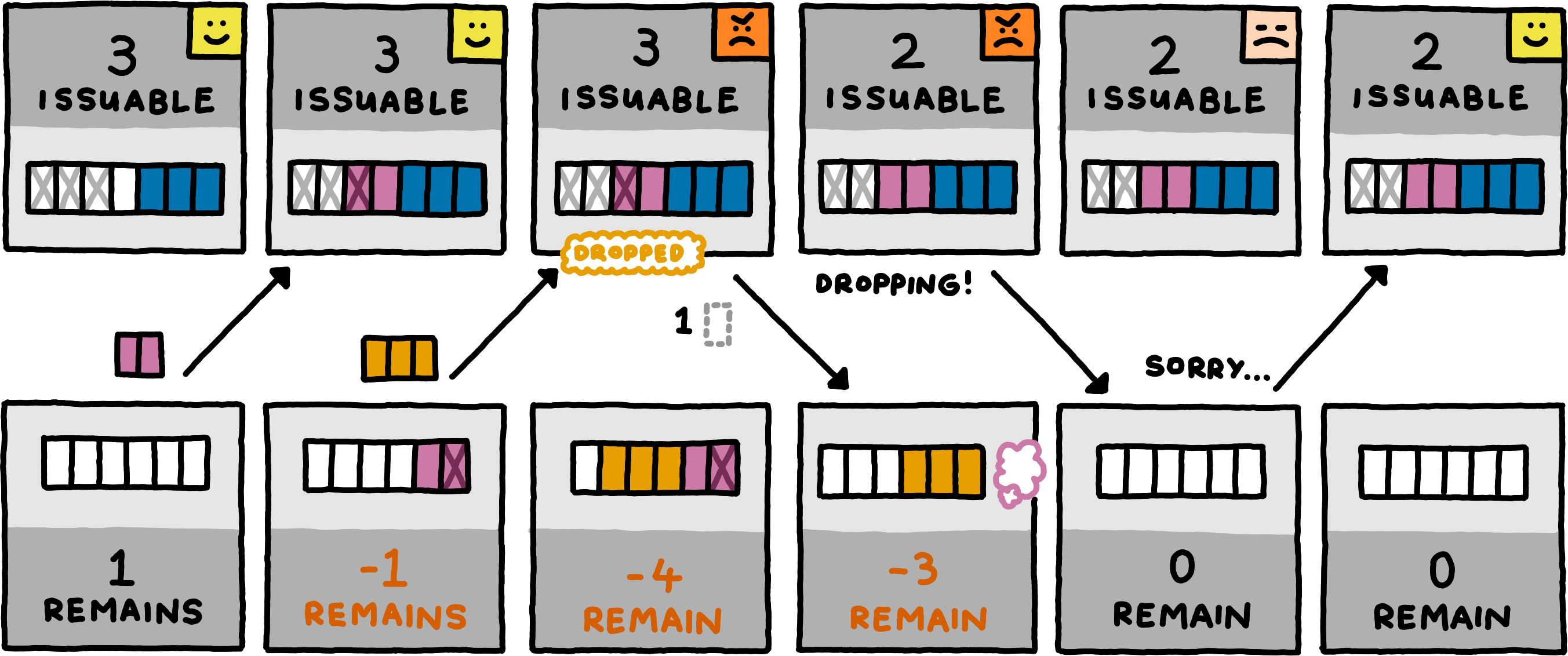

To keep the complexity of the feedback mechanism minimal, we adopt a simplistic solution: when the server drops an optimistically55The server is not allowed to drop messages for which it had previously guaranteed buffer capacity. transmitted channel message, it must keep dropping all channel messages on the same logical channel, until it receives an apology for that logical channel from the client. When the server starts dropping messages on some logical channel, it must notify the client. After receiving the notification, the client can send an apology, knowing that the server will revert back to normal operation for that logical channel after receiving it.

This approach comes with two subtleties. First, the server must never buffer partial messages — if it can buffer all but one byte of a message, it must still drop the full message and all subsequent ones until the apology. Second, the server must issue guarantees for all the optimistic message bytes that it did manage to buffer, before informing the client that it has started to drop messages. This is necessary so that the client can reconstruct exactly which of its messages got dropped, and which still made it through.

In this example, the client empties its queue, whereas a realistic implementation would probably keep the queue to (transparently) retransmit all dropped messages before sending new ones. Note further that the server had to issue at least one guarantee before sending the dropping notification, but it would also have been allowed to issue more guarantees — a realistic server would have issued all three issuable guarantees.

When a server preemptively issues guarantees, the client can defer flow control fully to the server by never sending optimistically, eliminating the need to buffer messages for retransmission. This behaviour would lead to a deadlock however with a server that uses guarantees as acknowledgements only.Some servers might implement a credit-based scheme and preemptively issue guarantees, whereas other servers might use guarantees purely as a retroactive acknowledgement mechanism and expect their clients to send optimistically. Some servers might even have different policies for different logical channels. A client that knows which behaviour to except can adjust its own behaviour accordingly.

We prose an informal, non-normative coordination mechanism: by default, clients should assume that they need to send optimistically. On each logical channel on which a server wants to commit to handling flow control via preemptive guarantees, it should issue zero guarantees as its first action related to that channel.

LCMUX Specification

Having introduced all these concepts and terminology, we can now define how LCMUX expresses them over a reliable, ordered, byte-oriented, bidirectional communication channel. LCMUX allows multiplexing global messages and channel messages on up to logical channels.

LCMUX is a message-oriented protocol. To avoid confusion with global messages and channel messages, the units of information transfer in LCMUX are called frames. Some frames encapsulate global messages or channel messages, while others transmit metadata only.

There are nine different kinds of frames. The first four are sent by the server to the client:

}The server kindly asks the client to send an AbsolveFrame such that the client’s remaining guarantees will be target (or less) for the channel channel.}The server promises to the client that after processing (i.e., receiving and not dropping) bound ( or possibly fewer) bytes of channel messages on the channel channel, it will drop all future channel message bytes on that channel.}The server notifies the client that it has started dropping channel messages on the channel channel, and that it will continue to do so until receiving an ApologiseFrame from the client. The server must issue any outstanding guarantees for channel before sending this frame.}The remaining five kinds of frames are sent by the client to the server.

}}The client promises to the server that after the server has processed (i.e., received and not dropped) bound (or possibly fewer) bytes of channel messages on the channel channel, the client will not send any more channel message bytes on that channel.}}}Note that the bytes in a SendGlobalFrame must correspond exactly to one full message of a higher-level protocol. The bytes in a SendChannelFrame in contrast need not form a full message of a higher-level protocol; LCMUX can be used to transparently fragment and interleave arbitrarily large higher-level channel messagesGlobal messages cannot be fragmented this way because then decoding would require memory allocation, which in turn would imply a need for resource control. by splitting their transmission over several frames (or it may even send multiple small channel messages in a single SendChannelFrame).

An LCMUX session consists of both peers exchanging frames, encoded with the encoding relations we define next. Note that the codes of frames sent by a client and frames sent by a server are disjoint and can be distinguished by their first byte. This allows both peers to take on both roles — a typical LCMUX-based higher-level protocol runs two LCMUX sessions with reversed roles concurrently over a single underlying transport channel.

Server Frame Encodings

We define an encoding relation EncodeIssueGuaranteesFrame for IssueGuaranteesFrame. The codes in EncodeIssueGuaranteesFrame for any IssueGuaranteesFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1111. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| A tag of width for val.amount, followed by the corresponding compact U64 encoding. | |

We define an encoding relation EncodePleadFrame for PleadFrame. The codes in EncodePleadFrame for any PleadFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1110. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| A tag of width for val.target, followed by the corresponding compact U64 encoding. | |

We define an encoding relation EncodeLimitReceivingFrame for LimitReceivingFrame. The codes in EncodeLimitReceivingFrame for any LimitReceivingFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1101. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| A tag of width for val.bound, followed by the corresponding compact U64 encoding. | |

We define an encoding relation EncodeAnnounceDroppingFrame for AnnounceDroppingFrame. The codes in EncodeAnnounceDroppingFrame for any AnnounceDroppingFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1100. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

Client Frame Encodings

We define an encoding relation EncodeAbsolveFrame for AbsolveFrame. The codes in EncodeAbsolveFrame for any AbsolveFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1011. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| A tag of width for val.amount, followed by the corresponding compact U64 encoding. | |

We define an encoding relation EncodeLimitSendingFrame for LimitSendingFrame. The codes in EncodeLimitSendingFrame for any LimitSendingFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1010. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| A tag of width for val.bound, followed by the corresponding compact U64 encoding. | |

We define an encoding relation EncodeApologiseFrame for ApologiseFrame. The codes in EncodeApologiseFrame for any ApologiseFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1001. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

We define an encoding relation EncodeSendGlobalFrame for SendGlobalFrame. The codes in EncodeSendGlobalFrame for any SendGlobalFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 – 3 | The bitstring 1000. |

| 4 – 7 | A tag of width for val.length. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| The raw bytes of val.content. | |

We define an encoding relation EncodeSendChannelFrame for SendChannelFrame. The codes in EncodeSendChannelFrame for any SendChannelFrame val are the bytestrings that are concatenations of the following form:

| Bits | Big-Endian Bitfield |

|---|---|

| 0 | The bitstring 0. |

| 1 – 3 | A tag of width for val.length. |

| 4 – 7 | A tag of width for val.channel. |

| The corresponding compact U64 encoding for bits 4 – 7. | |

| The corresponding compact U64 encoding for bits 1 – 3. | |

| The raw bytes of val.content. | |

Resource Handles

While this section is not part of the LCMUX specification proper, it does inform implementation, particularly implementations to be useful for Willow.In this section, we discuss how to implement a common special case of resource control on top of LCMUX: that of maintaining numeric identifiers for shared context between two peers. For example, a peer might send a large piece of data, give it a numeric identifier, and then reference the data through its identifier rather than repeatedly including the data in future messages.

Depositing data like this with the other peer requires them to spend resources (memory), so immediately questions of resource control and throttling arise. The freeing of bound resources also introduces some concurrency concerns. We first sketch a lightweight approach to maintaining data handles without considering resource control, and then show how to implement that approach on top of logical channels such that resource concerns are addressed.

We consider a client who asks a server to bind some data to numeric resource handles. The same protocol might use multiple types of data, each with its own independent handle types (and independent resource control).

Since both the client and the server must keep track of all resource handles, they should both be able to recover resources by removing bindings. We call this operation freeing a resource handle.

Note that we only discuss resource handles that live during a particular protocol run between two peers. We do not consider the problem of persisting peer-specific state across sessions.

Managing Resource Handles

We use consecutive natural numbers as resource handles. For each handle type, both peers keep track of the least number that has not yet been assigned as a handle of that type. In order to bind a value to a resource handle, the client simply transmits the data and its type. The least number that had not been assigned before becomes the resource handle of the transmitted data. Both peers add the new mapping into some data structure they can use to later dereference the resource handle into its value.

The main issue in maintaining resource handles is that of coordinating freeing over an asynchronous communication channel. Suppose one peer removes a handle-to-value binding from their local mapping, sends a message to inform the other peer, but that peer has concurrently sent some message that pertains to the resource handle in question. Everything breaks!

Hence, freeing is a multi-phase process. When Alfie wants to free a resource handle, he sends a global message to inform his peer (Betty). This message acts as a promise that Alfie will not reference that resource handle in any of his future messages. He does not locally delete the handle yet, however. Betty, upon receiving the message, should send her own message in return, which will act as a promise that she will not reference the resource handle in her future messages either.

When a peer has both sent and received a message to free a resource handle, it still cannot remove the data from memory: it might still have buffered some older messages on some logical channel that reference the resource handle. Only once it knows that no more old messages reference the resource handle can it release the data.

To this end, every peer counts how many messages it sends that refer to any one resource handle. When a peer whishes to free a handle, it includes its (then final) reference count with that message. Similarly, every peer counts how many messages it receives that pertain to any one resource handle. Only once that count matches the received reference countin the freeing message does it release the bound data.

Because it helps to have consistent terminology, we give explicit names to the distinct states in the process of freeing.

- Initially, a resource handle is FullyBound.

- After a peer sends a message asking to free a FullyBound resource handle, that peer marks it as MeFreed.

- When a peer receives a message asking to free a FullyBound resource handle, that peer marks it as YouFreed

- Once both peers have sent their messages for freeing, a MeFreed or YouFreed resource handle is AwaitingDeletion.

- Once a peer knows for sure that it will not process any old messages that reference a resource handle that is AwaitingDeletion in the future, the resource handle is FullyDeleted. This state typically requires no explicit representation in memory, because the peer would actually release the storage.

!) and receiving (?) of messages. FLUSH indicates the moment the peer knows that the resource handle cannot be referenced in the future.Resource Control

Resource handle allocation requires peers to use some of their finite pool of memory. Managing this resource involves exactly the same considerations as LCMUX logical channels do. This allows for a simple — at least in principle — solution: for each handle type, use a dedicated logical channel for the messages that bind those resource handles. The issuing of guarantees can correspond to the memory available for resource handles of that handle type.

In practice, this turns out to be slightly more complicated than one might hope. First off, moving the incoming messages for binding resource handles into a buffer makes little sense, since guarantees should correspond to actual resource handles storage space, not the space for buffering merely the instructions for allocation of resource handles. LCMUX implementations should be flexible enough to immediately process these incoming messages, allocating resource handles storage, and using that for driving the issuing of guarantees.

When resource handles are to be stored in a data structure that allows for efficient queries on all stored handles, predicting available storage space for proactively issuing guarantees can be difficult or impossible. It might be possible to provide conservative estimates66At the cost of more complicated data structure implementations., but most peers will simply not issue guarantees, using them only as acknowledgements for optimistically transmitted handle-binding messages.

Optimistic binding of resource handles complicates the state space of each individual binding: receiving guarantees gives confirmation that a binding can be worked with, but receiving an AnnounceDroppingFrame means that the binding has to be discarded.

The following additional states are possible:

- When binding a resource handles optimistically, it starts out HopefullyBound; sufficient guarantees lead to a transition to FullyBound.

- A peer can request freeing of a resource handle that it itself had bound optimistically, the resulting state is called HopefullyMeFreed. Suffcient guarantees lead to a transition to MeFreed.

- An AnnounceDroppingFrame for a HopefullyBound or HopefullyMeFreed indicates that the resource handle was never bound it the first place, it got Dropped. Implementations probably do not need to represent this variant explicitly.

After optimistically binding a resource handle, a peer may immediately reference that handle in its subsequent messages, without waiting for the acknowledging guarantees. If the resource handle could not be bound by the other peer, those messages become meaningless. Hence, every peer simply discards all incoming messages that reference resource handles that are not bound at that point in time77The whole system design ensures that messages are never discarded if the sender followed all rules and the optimistically bound resource handle did actually get processed by the receiver.. This applies both to application-level messages and to messages for freeing an optimistically bound resource handle. The soundness of this approach depends on handle creation messages to not be buffered, but to be processed immediately, despite technically belonging to a logical channel.

Senders that do not wish to incorporate complex retransmission logic into their implementation can simply issue their resource handles optimistically, and then wait for acknowledging guarantees (i.e., a transistion to the FullyBound state) before actually working with the handles.